Physical annealing is a three stage process that has been known and used for shaping metals since about 5000 B.C. [Enc00b]. Any annealing process consists of three stages: heating to the desired temperature, holding at that temperature, and cooling, usually to room temperature [Ame]. Glass, crystals [Enc00a], and other materials are also annealed to render them less brittle and more workable [Col94].

Sequences of times and temperatures, called `annealing' or `cooling' schedules, are critical in two ways: If the rate of the temperature change between the outside and inside of the piece is too great, temperature gradients and internal stresses may be induced that may lead to warping or cracking. Also, the actual annealing time must be long enough to allow for any necessary transformations to take place [Ame].

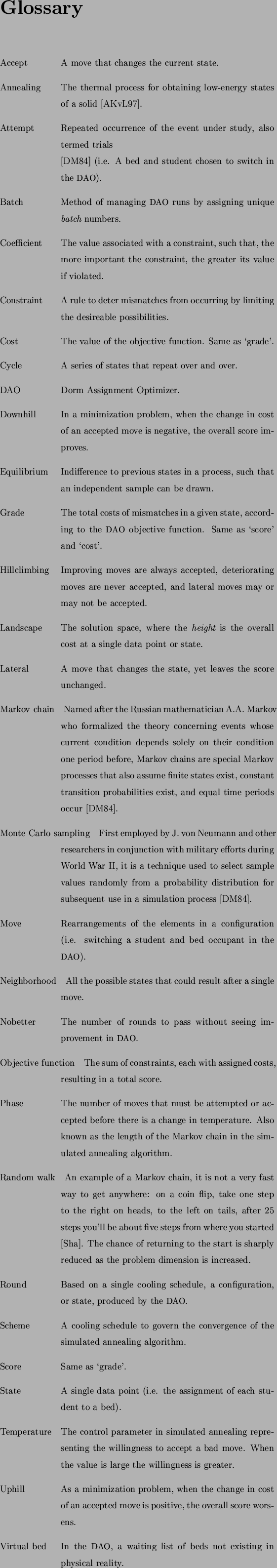

Using Monte Carlo sampling techniques, the physical annealing process has been modeled successfully by computer simulation methods. The analogy between a physical many-particle system and a combinatorial optimization problem is based on the following equivalences [AKvL97]:

The Metropolis algorithm for Monte Carlo is the grandfather of

simulated annealing algorithms, proposed in 1953 in [MRR$^+$53]. Its

significance is highlighted by its selection as the top algorithm with

the greatest influence on the development of science and engineering

in the ![]() Century for offering ``an efficient way to stumble

towards answers to problems too complicated to solve exactly''

[DS00].

Century for offering ``an efficient way to stumble

towards answers to problems too complicated to solve exactly''

[DS00].

Kirkpatrick and Gelatt first tested simulated annealing on the

travelling salesman problem, finding locally optimal solutions for  up to 6000 sites [KGV83]. At that time the exact solution had

been obtained for 318 sites. Annealing was also used to optimize the

design of complex integrated circuits by arranging hundreds of

thousands of circuit elements to minimize chip space requirements and

to reduce interference among their connecting wires [KGV83].

up to 6000 sites [KGV83]. At that time the exact solution had

been obtained for 318 sites. Annealing was also used to optimize the

design of complex integrated circuits by arranging hundreds of

thousands of circuit elements to minimize chip space requirements and

to reduce interference among their connecting wires [KGV83].

For certain NP-hard problems, where poor local extrema abound but are not far from good local extrema, the simulated annealing technique outperforms straightforward iterative improvement, at the cost of much longer running times [MS91].

It has been applied to many problems, ranging from the practical to theoretical [AKvL97]. A related, yet more complex, example is academic course scheduling at Syracuse University [EFC98]. Other NP applications include signal and image processing, task allocation, network design, graph coloring and partitioning [JAM91,JAM89], and molecular analysis.

Four ingredients are needed to apply simulated annealing to new problems [KGV83]:

A concise description of the system configuration; A random generator of moves or rearrangements of the elements; A quantitative objective function representing all the trade-offs; An annealing schedule of temperatures and length of ti

Annealing generally begins by reading in an existing solution to the

problem or generating a random solution. Once the starting temperature

and termination criterion have been established (perhaps by examining

a large number of solutions) the actual annealing begins. At each

iteration, the current solution is randomly perturbed to create a new

solution (this step is often referred to as a move). The change in

cost ![]() from the previous to the new state is calculated, and

the move is accepted with probability

from the previous to the new state is calculated, and

the move is accepted with probability

its Boltzmann probability factor (or sigmoid), where ![]() is

temperature.

is

temperature.

The probabilities for different changes in cost at different

temperatures intersect at the center point (0,.5) as shown in Figure

1.1, where there's a 50-50 chance of accepting a move when

no change in score occurs whatever the temperature. When the

temperature is high, the solid ![]() line shows there is almost

always a 50-50 chance of accepting a move regardless of the cost. As

the temperature decreases, the bold

line shows there is almost

always a 50-50 chance of accepting a move regardless of the cost. As

the temperature decreases, the bold ![]() line approaches a

probability of one as the change in cost decreases and a probability

of zero as the change in cost increases, showing we are very likely to

take improving moves and less likely to take deteriorating moves.

line approaches a

probability of one as the change in cost decreases and a probability

of zero as the change in cost increases, showing we are very likely to

take improving moves and less likely to take deteriorating moves.

In general, temperature is used to scale differences in height of the landscape. Raising the temperature flattens a rugged landscape by a greater willingness to accept worse moves. Small irregularities on a smooth landscape are accentuated by lowering the temperature and accepting mostly improving moves. Landscapes are depicted graphically in .

The inherent problem with only accepting better moves, hillclimbing, is that we might get stuck in a valley that can't be escaped by taking downhill moves. The willingness to move uphill to a worse position gives simulated annealing the advantage of possibly escaping from local minima in hope of finding a deeper valley farther away.

If the move is not accepted, the previous state is restored. After a certain number of moves, the temperature is decreased -- thus decreasing the probability of accepting uphill moves. When no downhill (better) moves exist in the neighborhood, we have reached a local minimum. This does not imply we've reached a global minimum solution, however.

Finally, the algorithm terminates when some specified stopping criterion is met -- e.g., when no improvement has been found for some number of moves.

One challenge with annealing is the determination of the parameters controlling the execution of the algorithm [Lee94]. It is still an art [AKvL97].